The AI Machine Men Snatching Our Souls

(Fuck AI Part II)

At the beginning of the year I wrote an article titled Fuck AI and, given the rapid advance of our collective AI dystopia since then, I thought I’d write a part two.

Because the more I think about it the more I believe it’s impossible to overstate the dangers - physical, biophysical, economic, social, cognitive and planetary - posed to us by an AI future.

And the frightening thing is that right now, an AI future is precisely what we’re getting whether we like it or not, or whether we want it or not.

This week the UK announced that all schools in England would be given an AI-generated attendance target that somehow, magically (no one has explained how), will reduce the number of kids skipping classes.

It strikes me as exactly the sort of crackpot scheme spewed up when the cold dead reasoning of technocratic management fuses with reductive machine logic.

In so many ways AI is the perfect tool of technocratic management, enabling spreadsheet merchants to step back and disavow any outcome, because the machine told them, the machine is all-knowing, and the machine is good.

And according to British prime minister Keir Starmer, the machine isn’t just good, and it isn’t just great. According to Starmer, the machine “makes us more human.” These were the exact words he uttered in reference to AI back in June at the Tech Week conference in London. Starmer is a true evangelist for AI. In January when rolling out the UK’s ‘AI strategy’ he said (and again this sounds almost too cartoonishly villainous to be true, but it is) that his government was going to “mainline AI into the veins of the UK.”

The furious bed-breaking sweat Starmer manages to work up for AI led his government to sign a one billion dollar deal with Palantir, the demonic AI leviathan that played a key role in the genocide of Gaza. Palantir was founded by Peter Thiel, a man with a legitimate top 10 shout to be the antichrist on Earth. The UK military is going to embed Palantir’s ‘Gotham’ software into its military tracking and targeting systems now that it has been successfully field tested in Gaza. The UK is also using Palantir’s ‘Foundry’ software in the NHS to decide who is eligible for which drugs and what surgery, and to make sure dirty illegals aren’t trying to stop themselves being dead.

Just the other day Palantir CEO Alex Karp, a man with a legitimate top 10 shout to challenge Peter Thiel as the antichrist on Earth, said that an American surveillance state would be preferable to China winning the AI race.

Whether Palantir software sits behind the UK’s AI-generated school attendance targets is unknown. But it wouldn’t be a surprise. Nor would it be a surprise to see a Palantir-powered drone hovering over the houses of errant school-skipping children when these top-down targets don’t achieve the desired outcomes. What’s more top down than a drone over your head, eh?

And in the rush to mainline AI, so far the UK has only pricked a minor vein. AI-powered everything everywhere is the goal, from digital currencies to the rapidly advancing plans for digital ID. The outcome, it seems obvious, is to construct a perfect all-seeing architecture of surveillance that knows everything about you, including your daily movements (bowel or otherwise). But nothing to hide, nothing to fear, right? Perfectly benign, until you want to protest against genocide or say anything online the ruling junta in your marvellous social democracy deems too edgy. Maybe you think I’m exaggerating, but it’s already happening. In the UK more than 30 people a day are being arrested for online comments, by some counts the most extreme regime of policing in the world. Fewer than 10% lead to a conviction. How are they tracking these people down? AI-powered digital tools.

As I said, all perfectly benign, if you love fascism.

In the private sector, a similar push is underway, and not just in the UK of course.

A vast number of jobs advertised on LinkedIn are positions to teach AI models. What happens when the models no longer need the humans and can teach themselves? What happens when these models are then applied across a variety of industries? Other than in niche media, what happens to us, the poor saps put out of work by AI, isn’t a topic of political or social conversation.

Every single person I know working in a white collar job is now using AI to do at least a part of their job, an increase which has happened entirely in the last couple of years. Companies are buying it, they are telling the workers to use it, and everyone’s just going “yep, cool, plug me into that machine baby.” It seems inevitable that a level of joblessness that would make the 1920s feel proud is fast coming down the track. And a zombified white collar workforce, unable to shed its liberal politics and rally an ounce of scepticism in the face of digital deindustrialisation, is ushering it in.

That is, unless the AI money bubble pops first.

By some counts the AI bubble is seventeen times larger than the dotcom bubble of the early 2000s. Ten AI start-ups that haven’t made a dollar in profit between them have grown to a near $1 trillion market value in the past year. Other calculations say that at a $5 trillion market cap AI chip maker Nvidia is now worth one-third of all US GDP. And now the AI worshippers and venture capitalists behind the bubble are just shrugging and saying, of course it’s a bubble, but bubbles are good dontcha know.

As the bank runs accelerate and as you’re queuing to withdraw your last pennies from the ATM to pay your landlord who just raised your rent 10x to cover their called-in loans, as you ponder plain bread or bread with a smear of butter for your sole meal of the day, remember: THE AI BUBBLE WAS GOOD.

No one is quite sure what will happen when this bubble bursts. Last week ChatGPT head nonce Sam Altman said the goal, essentially, is to make AI too big to fail. He said that when AI becomes the behemoth it might already be, governments will have to step in as the insurer of last resort because by then so much will rely on AI, the companies simply can’t be allowed to collapse. But what if AI, at the point of failure, is too big to bail? Whether the bursting of the AI bubble triggers government bailouts a la 2008 or not, it will take down the global economy.

That is, unless AI cooks or starves the world first.

Now this one probably isn’t going to be the first order consequence-at-scale, but the ecological cost of AI is huge. Google has increased its carbon emissions 48% in the last five years specifically because of artificial intelligence, while Microsoft’s are up 30% since 2020. A ChatGPT query needs nearly 10 times as much electricity to process as a basic internet search engine query. In September, Altman sent an internal memo where he said OpenAI’s goal is to build 250 gigawatts of capacity by 2033. If this is achieved, ChatGPT queries will use almost exactly as much electricity as India’s 1.5 billion people.

Then there’s the water. A large data centre needs up to 5 million gallons of water every day to cool, and every 100-word AI prompt uses one bottle of water for cooling. And it has to use freshwater, not sea water, because of the salt. In other words, people are using litres of drinking water to make HILARIOUS videos of Michael Jackson eating KFC with Prince in the White House.

Then there’s the PFAS and other forever chemicals used by Nvidia and others to make the GPU chips. And these chips are getting larger and larger, requiring more materials and energy to make.

And finally, there are the companies like ExxonMobil using AI (Microsoft’s Azure in this case) to optimise drilling and produce more barrels of oil.

Oh and finally, finally, there’s the agricultural land being converted into AI data centres.

Then on the injustice front, there’s the sheer exploitation, with workers in the global south working 22 hour days and earning pennies to train AI models.

AI is nothing more than an extension of extractive imperialism abroad and a tool of surveillance and capitalist profit-seeking at home.

What else? Oh yeah, it is rotting our brains. By outsourcing our cognition, AI isn’t freeing us from unthinking machines, it’s turning us into them. An MIT study from earlier this year found that people who used ChatGPT over a few months had, compared to those who didn’t use it, the lowest brain engagement and consistently underperformed at neural, linguistic, and behavioural tasks.

We’ve been sold AI as an emancipatory tool when in fact it is enslaving us. There will be a time, and for some people it is upon them already, when they won’t be able to function in day-to-day life without AI. They will need it for work and pleasure, for remembering basic tasks, for responding to emails, for creation, for emotional support, for life advice. And this reliance will only grow. We are facing the full capture of the human mind, and of human society, by psychopathic, sociopathic Silicon Valley AI reptiles.

AI will also make it increasingly impossible to separate fact from fiction. It was recently revealed that a popular TikTok account based out of a retirement home showing old people doing wholesome, fun things was actually AI-generated. People who’d been invested in the tales of the residents and their daily dramas were apparently left bereft. An AI-generated song is at the top of the country charts in the US. In the future you will no longer be sure if that new singer or band you like is actually real, or if that sweet old digital person is the figment of someone’s clout-chasing imagination.

This will be the decade of psychosis and mental collapse if we don’t shut this thing down.

Right now I’m researching a story about AI-generated online course companies that are structured essentially as a Ponzi scheme, with one course provider recommending another, linking to another, and so on. These companies all have professional websites with fake AI-generated employees designed to look real, and AI-generated video reviews from ‘customers’ designed to convince potential new customers they are legitimate operators. The prices of the courses, all written by AI, range from the tens to the hundreds of dollars and cover every possible niche. One of those niches is, and I shit you not, Atlantean Dolphin Reiki. Targeted at naive new age woo woo types, and all yours for just $53!

While they’re fleecing us, tricking us and stealing our jobs, AI companies also want to rob us of a deeply primal process connecting us to our intrinsic humanity: they want to steal our grief.

Silicon Valley start-up 2wai can create for you a life-like, conversational on-screen avatar of your dead relative so you can ‘celebrate’ the birth of a child with your dead mother, or the tenth birthday of your child with your dead spouse.

In a soft technological culture that encourages consumption over reflection, positivity over sadness and in which death is taboo, I fear many will take the path of least cognitive and emotional resistance. Nature’s rhythms have long been banished by an urbanised and industrialised world. For a brain softened and moulded by positivity prompts, banishing the final and most conclusive act of nature may be the logical conclusion.

By so listlessly adopting AI without thought of the consequence, we are handing over our essential humanity, our deepest and most profound emotions, all the things that make us thinking, feeling, acting, sentient beings to the cloud and the Silicon Valley elite. By distributing our cognition to machines, we are becoming machine men and women ourselves. And for what? For some imagined ease? To avoid discomfort?

The discomfort of what?

The discomfort of creation?

Of loving?

Of reasoning?

Of grieving?

Of thinking?

To avoid the discomfort of being a human being?

The good news is that people are fighting back, and I spoke to two of them this week.

Kim Crawley and Dally are two of the people behind an anti-AI mutual support group called StopGenAI which raises money to support those who’ve lost their jobs as a result of AI. Kim quit her job at the Open Institute of Technology after the administrators “forced us to proctor student work with an AI application called TurnItIn to detect if students are using GenAI to cheat, all while pushing ‘responsible’ AI courses and teaching students how to write prompts for AI chatbots.” Crawley says she was “insulted to have to use a bot to detect a bot.” Dally, a former contractor for Google’s parent company Alphabet, says she was iced out of the Alphabet union because of her outspokenness over AI. Dally, who earned poverty wages as a rater for Google’s AI models, says the “union became part of the problem. They started pivoting away from poverty wage workers and ignoring our voices (on AI) because the $250-300k workers weren’t getting what they wanted out of the union.” Dally also said she watched Google maps, “a tool of surveillance, get better and better” while Google search “got worse and worse.” She says this was a choice about priorities, not an accident.

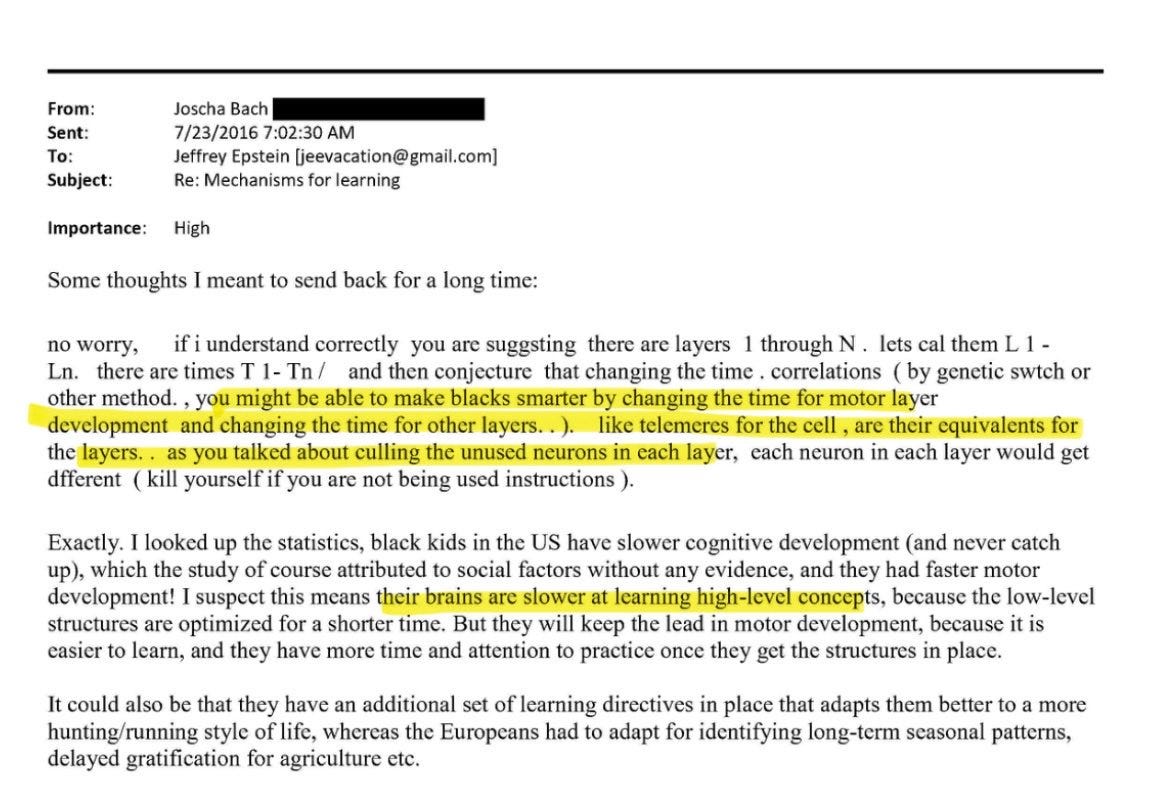

Dally also says her work showed her that AI models are “racist, ridiculously racist,” an observation supported, oddly enough, by the newly released Epstein emails. Joscha Bach, a leading AI researcher and cognitive science professor at the University of Cambridge, mused to Epstein about the possibility of using AI and neuroscience ‘to make blacks smarter.’ In a 2016 email, Bach stated confidently that the brains of black people ‘are slower at learning high-level concepts.’ Open, flagrant racism is a feature, not a bug, of big tech people and their AI models.

Because of this inherent racism and the wider dangers posed by AI, Crawley says StopGenAI is not interested in pushing counter legislation, the approach taken by other AI-concerned groups such as The People’s AI Action Plan. “The vast majority of resistance is coming from the liberal professional middle classes,” she says. This conciliatory approach, Crawley and Dally say, will lead to the same AI integration and dystopian outcomes, just on a slightly slower time frame.

Kim and Dally have been struggling with fundraising, so if you can help them out, please do.

We have to resist the integration of our minds into the cloud. We have to resist the replacement of our humanity with plastic and metal processors. More specifically and materially, we have to resist the big tech monsters, the fascists and eugenicists who see us merely as tools to help them make the money that can buy them power and fund their ascent beyond the human plane.

Nothing Silicon Valley does is for our benefit. AI is not for our benefit. There is no beneficence here. When we realise this, when we internalise this, when we figure out we are the marks, we can start to make better choices (some of which I laid out in a previous article) about our digital lives.

Everything fascistic, antisocial and antidemocratic pushing our societies towards destruction now flows through Silicon Valley.

The pervasiveness of big tech in our lives is going to make this very difficult, but if we don’t somehow cut the cord between our societies, AI technologies and the people that control them, then we are, quite simply, fucked.

(Two of my most recent articles - The Liberal Abandonment Of Greta Thunberg and the AI Drones Used In Gaza Surveilling US Cities - went viral and were two of my most well-read pieces ever. But while my free subscribers surged, my paid subscribers actually fell. I’ll resist putting this newsletter behind a paywall but the truth is I can’t keep doing this writing and reporting for free, so throw me a few dollars, pounds or euros if you can. Thanks.)

The central aim of AI - or at least of the fractionally small minority that have put their money into the project - is Minority Rule. In our digital world, which has enabled direct communication between like-minded thinkers, the top-down dictates from our Minority Rulers via their ‘mainstream media’ are losing their grip on the very demographic that has always been their greatest threat - the educated middle classes.

With AI our Minority Rulers can identify anyone who poses an intellectual threat and shut them down: render them unemployed, leave them unable to make digital payments, block their communications. Today it is already the easiest thing for this select few to shut down almost any person’s life, except that they number in the thousands (possibly hundreds of thousands if we include their vizier class) and we in the billions. But AI can bridge that gap.

Truancy is simply an excuse for these minority-owned behemoths to harvest digital information on the personal online dealings of children too young yet to have useful information showing up in healthcare data; to be able to pollute sponge-like young minds with their lies and propaganda; and to identify any thinking young mind that may become too inquisitive.

AI exists to help the rulers suppress the ruled: it is the ultimate tool of Fascism.

I've posted this comment elsewhere because I believe Gary Marcus to be a sane actor and prognosticator in this arena.

"We aren’t close to AGI (Artificial General Intelligence), but we are reshaping our world on the premise that we are. I think we are making a huge mistake."

-Gary Marcus, "The Grand AGI Delusion", Oct. 12, '25. https://garymarcus.substack.com/p/the-grand-agi-delusion

And thanks for your absolutely correct summary statement. Pay attention to this one, readers: "Nothing Silicon Valley does is for our benefit". NOTHING!